Tutorials

IncrementalInference.jl ContinuousScalar

The application of this tutorial is presented in the abstract, so the user is free to imagine any system of relationships: for example, a robot driving in a one-dimensional world, or a time traveler making uncertain jumps forwards and backwards in time. The tutorial implicitly shows how multi-modal uncertainty can be introduced by non-Gaussian measurements and then transmitted through the system. It also illustrates consensus through an additional piece of information, which reduces the marginal beliefs of all stochastic variables to unimodal beliefs. Along the way, it shows how algebraic relations (i.e. residual functions) between multiple stochastic variables are calculated, and how the final posterior belief estimate is obtained from several pieces of information. Lastly, the tutorial demonstrates how automatic initialization of variables works.

This tutorial requires the RoME.jl and RoMEPlotting.jl packages to be installed. In addition, the optional GraphViz package allows easy visualization of the FactorGraph object structure.

To start, the IncrementalInference.jl package is brought into scope:

using IncrementalInference

Guidelines for developing your own functions are discussed in the Adding Variables and Factors section, and we note that the mechanizations and manifolds required for robotic simultaneous localization and mapping (SLAM) have been tightly integrated into the expansion package RoME.jl.

Next, the inference problem is described as a graphical model, using any of the existing concrete types that subtype AbstractDFG (i.e. <: AbstractDFG). Begin by creating an empty factor graph object and populating it with variable nodes, which are identified by Symbols, namely :x0, :x1, :x2, :x3.

# Start with an empty factor graph

fg = initfg()

# add the first node

addVariable!(fg, :x0, ContinuousScalar)

# this is unary (prior) factor and does not immediately trigger autoinit of :x0.

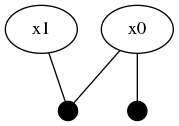

addFactor!(fg, [:x0], Prior(Normal(0,1)))Factor graphs are bipartite graphs with factors that act as mathematical structure between interacting variables. After adding node :x0, a singleton factor of type Prior (which was defined by the user earlier) is 'connected to' variable node :x0. This unary factor is taken as a Distributions.Normal distribution with zero mean and a standard devitation of 1. Graphviz can be used to visualize the factor graph structure, although the package is not installed by default – $ sudo apt-get install graphviz. Furthermore, the drawGraph member definition is given at the end of this tutorial, which allows the user to store the graph image in graphviz supported image types.

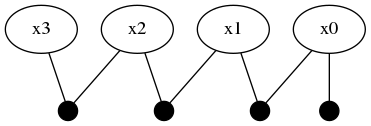

drawGraph(fg, show=true)

The resulting two-node factor graph (one variable node and one factor node) is shown in the accompanying image.
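To make the bipartite structure concrete, here is a hypothetical toy model in plain Julia. It is not the DistributedFactorGraphs.jl API; the types ToyFactor and ToyFactorGraph, the helper functions, and the factor labels :prior_x0 and :x0_x1 are all invented for illustration. Edges only ever join a factor to a variable, so a unary prior contributes one edge and a pairwise relative factor contributes two.

```julia
# Hypothetical toy model (NOT the DistributedFactorGraphs.jl API): a factor
# graph stored as two node sets, where every edge joins a factor to a variable.
struct ToyFactor
    label::Symbol
    variables::Vector{Symbol}   # the variables this factor connects
end

struct ToyFactorGraph
    variables::Vector{Symbol}
    factors::Vector{ToyFactor}
end

ToyFactorGraph() = ToyFactorGraph(Symbol[], ToyFactor[])

add_toy_variable!(g::ToyFactorGraph, v::Symbol) = (push!(g.variables, v); g)

function add_toy_factor!(g::ToyFactorGraph, label::Symbol, vars::Vector{Symbol})
    # a factor may only connect variables that already exist
    all(v -> v in g.variables, vars) || error("unknown variable in $vars")
    push!(g.factors, ToyFactor(label, vars))
    return g
end

# Bipartite edge list: (factor, variable) pairs only, never variable-variable.
toy_edges(g::ToyFactorGraph) = [(f.label, v) for f in g.factors for v in f.variables]

g = ToyFactorGraph()
add_toy_variable!(g, :x0)
add_toy_factor!(g, :prior_x0, [:x0])        # unary factor, like the Prior above
add_toy_variable!(g, :x1)
add_toy_factor!(g, :x0_x1, [:x0, :x1])      # pairwise factor, like LinearRelative
println(length(g.variables), " variables, ", length(g.factors), " factors, ",
        length(toy_edges(g)), " edges")
```

After only the first two calls the toy graph has the same two nodes as the drawing; the last two calls mirror the two-variable, two-factor structure built in the next section.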

Graph-based Variable Initialization

Automatic initialization of variables depends on how the factor graph model is constructed. This tutorial demonstrates this behavior by first showing that :x0 is not initialized:

@show isInitialized(fg, :x0) # false

Why is :x0 not initialized? Since no other variable nodes have been 'connected to' (or depend on) :x0, and the future intentions of the user are unknown, the initialization of :x0 is deferred until the latest possible moment. IncrementalInference.jl assumes that the user will generally populate a new variable node with most of its associated factors before moving on to the next variable. By delaying initialization of a new variable (say :x0) until a second, newer uninitialized variable (say :x1) depends on :x0, the IncrementalInference algorithms aim to initialize :x0 using more information from previous and surrounding variables and factors. Also note that graph-based initialization of variables is a local operation based only on the neighboring nodes; global inference occurs over the entire graph and is shown later in this tutorial.

By adding :x1 and connecting it to :x0 through a LinearRelative factor with a Normal-distributed measurement, the automatic initialization of :x0 is triggered.

addVariable!(fg, :x1, ContinuousScalar)

# P(Z | :x1 - :x0 ) where Z ~ Normal(10,1)

addFactor!(fg, [:x0, :x1], LinearRelative(Normal(10.0,1)))

@show isInitialized(fg, :x0) # true

Note that the automatic initialization of :x0 is aware that :x1 is not initialized, and therefore uses only the Prior(Normal(0,1)) unary factor to initialize the marginal belief estimate for :x0. The graph now contains two variable nodes and two factors.

Global inference requires that the entire factor graph be initialized before the numerical belief computation algorithms can be performed. Notice how the new :x1 variable is not yet initialized:

@show isInitialized(fg, :x1) # false

Visualizing the Variable Probability Belief

The RoMEPlotting.jl package allows visualization (plotting) of the belief state over any of the variable nodes. Remember that first-time executions are slow because of code compilation, and that future versions of these packages will use more precompilation to reduce this first-execution cost.

using RoMEPlotting

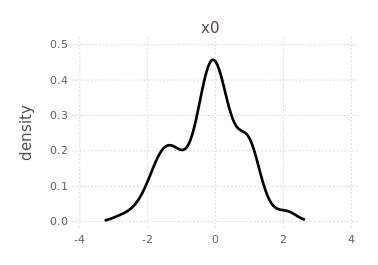

plotKDE(fg, :x0)

By forcing the initialization of :x1 and plotting its belief estimate,

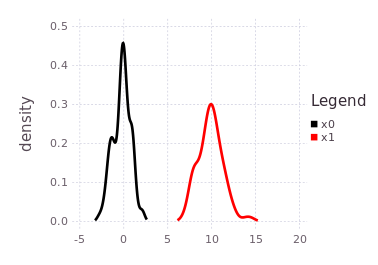

initAll!(fg)

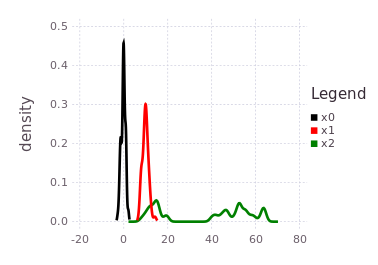

plotKDE(fg, [:x0, :x1])the predicted influence of the P(Z| X1 - X0) = LinearRelative(Normal(10, 1)) is shown by the red trace.

The red trace (predicted belief of :x1) is nothing more than the approximated convolution of the current marginal belief of :x0 with the conditional belief described by P(Z | X1 - X0).
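For intuition only, the same convolution can be approximated by Monte Carlo sampling with Julia's standard library. The function name predict_x1, the seed, and the sample count are assumptions of this sketch, and IncrementalInference.jl's own procedure differs. Adding independent samples of :x0 ~ Normal(0,1) and Z ~ Normal(10,1) yields samples of :x1, whose mean should be close to 10 and whose standard deviation should be close to sqrt(2) ≈ 1.41, because the variances of independent terms add.

```julia
using Random, Statistics

# Hypothetical sketch: approximate the predicted belief on :x1 by sampling.
# Assumes x1 = x0 + z with x0 ~ Normal(0, 1) (the Prior) and
# z ~ Normal(10, 1) (the LinearRelative measurement). Illustration only,
# not IncrementalInference.jl's internal algorithm.
function predict_x1(n::Int; rng = MersenneTwister(42))
    x0 = randn(rng, n)            # samples from the :x0 marginal belief
    z = 10.0 .+ randn(rng, n)     # samples from the relative measurement
    return x0 .+ z                # sample-wise sum approximates the convolution
end

x1 = predict_x1(100_000)
println("mean ≈ ", round(mean(x1); digits = 2), ", std ≈ ", round(std(x1); digits = 2))
```

A histogram of x1 would approximate the shape of the red trace; the exact digits printed depend on the random seed.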

Defining A Mixture Relative on ContinuousScalar

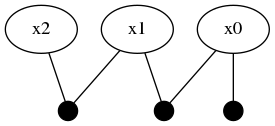

Another ContinuousScalar variable, :x2, is 'connected' to :x1 through a more complicated mixture likelihood, Mixture(LinearRelative, ...).

addVariable!(fg, :x2, ContinuousScalar)

mmo = Mixture(LinearRelative,
              (hypo1=Rayleigh(3), hypo2=Uniform(30,55)),
              [0.4; 0.6])

addFactor!(fg, [:x1, :x2], mmo)

The mmo variable illustrates how a nearly arbitrary mixture probability distribution can be used as a conditional relationship between variable nodes in the factor graph. In this case, a 40%/60% weighting of a Rayleigh and a truncated Uniform distribution acts as a multi-modal conditional belief. Interpret carefully what a conditional belief of this nature actually means.

Following the tutorial's practical example frameworks (robot navigation or time travel), this multi-modal belief implies that moving from one of the probable locations in :x1 to a location in :x2, by some process defined by mmo = P(Z | X2, X1), is uncertain in the same 40%/60% ratio. In practical terms, collapsing (through observation of an event) the probabilistic likelihoods of the transition from :x1 to :x2 may result in the :x2 location being at roughly either 15-20 or 40-65 units. The predicted belief over :x2 is illustrated by plotting it (green trace) after forcing initialization.

initAll!(fg)

plotKDE(fg, [:x0, :x1, :x2])
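The bimodal prediction on :x2 can also be reproduced, roughly, by sampling the mixture directly. This sketch avoids Distributions.jl and draws Rayleigh(3) samples via the inverse CDF; the helper names rayleigh, sample_mixture and predict_x2 are hypothetical, and the :x1 belief is assumed to be Normal(10, sqrt(2)), the prediction obtained for :x1 above.

```julia
using Random, Statistics

# Hypothetical helper: one Rayleigh(σ) draw via the inverse CDF,
# x = σ * sqrt(-2 log(1 - u)) with u ~ Uniform[0, 1).
rayleigh(rng, σ) = σ * sqrt(-2 * log(1 - rand(rng)))

# One draw from the 40%/60% mixture of Rayleigh(3) and Uniform(30, 55);
# also returns which hypothesis (1 or 2) produced the draw.
function sample_mixture(rng)
    if rand(rng) < 0.4
        return rayleigh(rng, 3.0), 1
    else
        return 30.0 + 25.0 * rand(rng), 2
    end
end

# Assumption: the :x1 belief is approximated as Normal(10, sqrt(2)).
function predict_x2(n::Int; rng = MersenneTwister(7))
    x1 = 10.0 .+ sqrt(2.0) .* randn(rng, n)
    draws = [sample_mixture(rng) for _ in 1:n]
    x2 = x1 .+ first.(draws)       # shift each :x1 sample by a mixture draw
    hypo = last.(draws)            # which hypothesis generated each sample
    return x2, hypo
end

x2, hypo = predict_x2(100_000)
println("fraction from hypo1: ", mean(hypo .== 1))
println("median of hypo1 samples: ", median(x2[hypo .== 1]))
println("median of hypo2 samples: ", median(x2[hypo .== 2]))
```

The samples split into two well-separated groups with about 40% of the mass in the lower one, which is the sample-based counterpart of the two modes in the green trace.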

Next, one more variable, :x3, is added through another LinearRelative(Normal(-50,1)) factor:

addVariable!(fg, :x3, ContinuousScalar)

addFactor!(fg, [:x2, :x3], LinearRelative(Normal(-50, 1)))

This expands the factor graph to four variables and four factors.

This part of the tutorial shows how a unimodal likelihood (conditional belief) can transmit the bimodal belief currently contained in :x2.

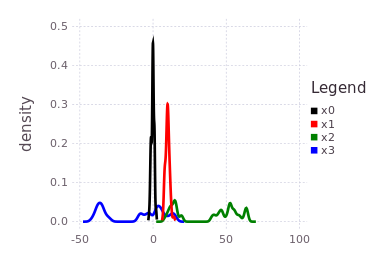

initAll!(fg)

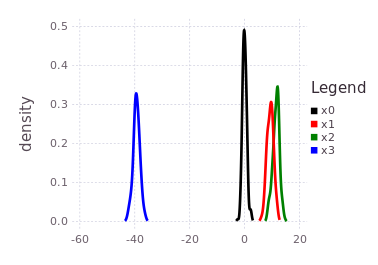

plotKDE(fg, [:x0, :x1, :x2, :x3])

Notice that the blue trace (:x3) is a shifted and slightly spread-out version of the initialized belief on :x2, obtained through convolution with the conditional belief P(Z | X2, X3).

Global inference over the entire factor graph has still not occurred, and will at this stage produce roughly similar results to the predicted beliefs shown above. Only by introducing more information into the factor graph can inference extract more precise marginal belief estimates for each of the variables. A final piece of information added to this graph is a factor directly relating :x3 with :x0.

addFactor!(fg, [:x3, :x0], LinearRelative(Normal(40, 1)))

Pay close attention to what this last factor means in terms of the probability density traces shown in the previous figure. The blue trace for :x3 has two major modes: one that overlaps with :x0 and :x1 near 0, and a second mode further to the left, near -40. The last factor introduces a shift, LinearRelative(Normal(40,1)), which essentially aligns the leftmost mode of :x3 back onto :x0.
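Why the leftmost mode is the one that survives can be checked with a short cycle-consistency argument. Treat each Normal factor by its mean and write z_{12} for the mixture draw on the :x1 to :x2 factor (this symbol is introduced here, not in the package); the relative displacements around the loop x0 → x1 → x2 → x3 → x0 must sum to zero:

```latex
\begin{aligned}
x_1 - x_0 &\approx 10, \qquad x_2 - x_1 = z_{12}, \qquad x_3 - x_2 \approx -50, \qquad x_0 - x_3 \approx 40, \\
0 = (x_1 - x_0) + (x_2 - x_1) + (x_3 - x_2) + (x_0 - x_3) &\approx 10 + z_{12} - 50 + 40
\quad\Longrightarrow\quad z_{12} \approx 0 .
\end{aligned}
```

A value of z_{12} near zero lies where Rayleigh(3) places probability mass, while Uniform(30, 55) assigns it zero density, so only the hypo1 branch of the mixture is consistent with the closed loop.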

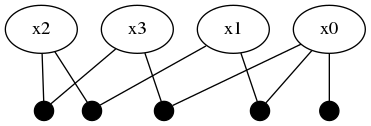

This last factor forces a mode selection through consensus. Through global inference, the new information obtained in :x3 is also propagated to :x2, where only one of the two modes will remain.
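A rough way to see this consensus effect numerically is self-normalized importance sampling, which is a different technique from the tree-based inference performed by solveTree!. The sketch samples the chain :x0 → :x1 → :x2 → :x3 forward using the factors above, then weights each joint sample by the likelihood of the closing LinearRelative(Normal(40,1)) factor. The function name consensus_weights, the seed, and the sample count are assumptions of this sketch.

```julia
using Random

# Hypothetical helper: inverse-CDF Rayleigh(σ) draw.
rayleigh(rng, σ) = σ * sqrt(-2 * log(1 - rand(rng)))

# Hypothetical sketch using self-normalized importance sampling (NOT the
# Bayes tree procedure of solveTree!): forward-sample the chain, then weight
# each joint sample by the loop-closure likelihood x0 - x3 ~ Normal(40, 1).
function consensus_weights(n::Int; rng = MersenneTwister(3))
    x0 = randn(rng, n)                                  # Prior(Normal(0,1))
    x1 = x0 .+ 10.0 .+ randn(rng, n)                    # LinearRelative(Normal(10,1))
    hypo = [rand(rng) < 0.4 ? 1 : 2 for _ in 1:n]       # 40%/60% mixture component
    z12 = [h == 1 ? rayleigh(rng, 3.0) : 30.0 + 25.0 * rand(rng) for h in hypo]
    x2 = x1 .+ z12
    x3 = x2 .- 50.0 .+ randn(rng, n)                    # LinearRelative(Normal(-50,1))
    r = (x0 .- x3) .- 40.0                              # residual of the closing factor
    w = exp.(-0.5 .* r .^ 2)                            # unnormalized Normal(40,1) likelihood
    w ./= sum(w)                                        # self-normalized importance weights
    return x2, hypo, w
end

x2, hypo, w = consensus_weights(200_000)
p_hypo1 = sum(w[hypo .== 1])      # posterior weight on the Rayleigh hypothesis
x2_mean = sum(w .* x2)            # weighted posterior mean of :x2
println("posterior weight on hypo1 ≈ ", p_hypo1)
println("posterior mean of :x2 ≈ ", round(x2_mean; digits = 2))
```

Samples drawn from the Uniform(30, 55) hypothesis receive negligible weight, so the weighted :x2 samples collapse onto the single lower mode, consistent with the mode selection described above.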

Global inference is achieved with local computation using two function calls, as follows.

tree = solveTree!(fg)

# and visualization

plotKDE(fg, [:x0, :x1, :x2, :x3])

The resulting posterior marginal beliefs over all the system variables are shown in this final plot.

It is important to note that although this tutorial ends with all marginal beliefs being unimodal and nearly Gaussian in shape, the package supports multi-modal belief estimates during both the prediction and global inference processes. In fact, many of the same underlying inference functions are involved in both the automatic initialization process and the global multi-modal iSAM inference procedure. This concludes the ContinuousScalar tutorial specific to the IncrementalInference package.